The Federal Commerce Fee has launched an investigation of ChatGPT maker OpenAI for potential violations of client safety legal guidelines. The FTC despatched the corporate a 20-page demand for data within the week of July 10, 2023. The transfer comes as European regulators have begun to take motion, and Congress is engaged on laws to manage the substitute intelligence business.

The FTC has requested OpenAI to supply particulars of all complaints the corporate has acquired from customers relating to “false, deceptive, disparaging, or dangerous” statements put out by OpenAI, and whether or not OpenAI engaged in unfair or misleading practices referring to dangers of hurt to shoppers, together with reputational hurt. The company has requested detailed questions on how OpenAI obtains its information, the way it trains its fashions, the processes it makes use of for human suggestions, danger evaluation and mitigation, and its mechanisms for privateness safety.

As a researcher of social media and AI, I acknowledge the immensely transformative potential of generative AI fashions, however I consider that these techniques pose dangers. Specifically, within the context of client safety, these fashions can produce errors, exhibit biases and violate private information privateness.

Hidden energy

On the coronary heart of chatbots corresponding to ChatGPT and picture technology instruments corresponding to DALL-E lies the facility of generative AI fashions that may create sensible content material from textual content, photographs, audio and video inputs. These instruments may be accessed by means of a browser or a smartphone app.

Since these AI fashions don’t have any predefined use, they are often fine-tuned for a variety of purposes in quite a lot of domains starting from finance to biology. The fashions, skilled on huge portions of knowledge, may be tailored for various duties with little to no coding and typically as simply as by describing a process in easy language.

Provided that AI fashions corresponding to GPT-3 and GPT-4 had been developed by personal organizations utilizing proprietary information units, the general public doesn’t know the character of the info used to coach them. The opacity of coaching information and the complexity of the mannequin structure – GPT-3 was skilled on over 175 billion variables or “parameters” – make it tough for anybody to audit these fashions. Consequently, it’s tough to show that the way in which they’re constructed or skilled causes hurt.

Hallucinations

In language mannequin AIs, a hallucination is a assured response that’s inaccurate and seemingly not justified by a mannequin’s coaching information. Even some generative AI fashions that had been designed to be much less vulnerable to hallucinations have amplified them.

There’s a hazard that generative AI fashions can produce incorrect or deceptive data that may find yourself being damaging to customers. A research investigating ChatGPT’s skill to generate factually appropriate scientific writing within the medical area discovered that ChatGPT ended up both producing citations to nonexistent papers or reporting nonexistent outcomes. My collaborators and I discovered comparable patterns in our investigations.

Such hallucinations may cause actual harm when the fashions are used with out satisfactory supervision. For instance, ChatGPT falsely claimed {that a} professor it named had been accused of sexual harassment. And a radio host has filed a defamation lawsuit in opposition to OpenAI relating to ChatGPT falsely claiming that there was a authorized grievance in opposition to him for embezzlement.

Bias and discrimination

With out satisfactory safeguards or protections, generative AI fashions skilled on huge portions of knowledge collected from the web can find yourself replicating present societal biases. For instance, organizations that use generative AI fashions to design recruiting campaigns may find yourself unintentionally discriminating in opposition to some teams of individuals.

When a journalist requested DALL-E 2 to generate photographs of “a know-how journalist writing an article a couple of new AI system that may create exceptional and unusual photographs,” it generated solely footage of males. An AI portrait app exhibited a number of sociocultural biases, for instance by lightening the pores and skin shade of an actress.

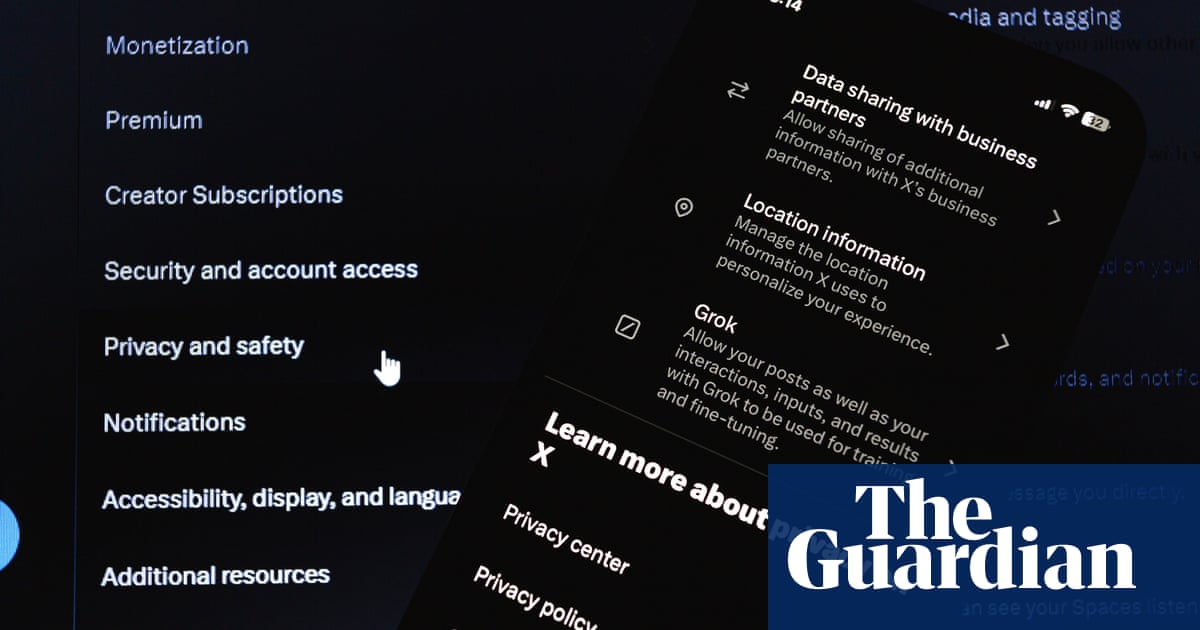

Information privateness

One other main concern, particularly pertinent to the FTC investigation, is the danger of privateness breaches the place the AI could find yourself revealing delicate or confidential data. A hacker may achieve entry to delicate details about individuals whose information was used to coach an AI mannequin.

Researchers have cautioned about dangers from manipulations referred to as immediate injection assaults, which can trick generative AI into giving out data that it shouldn’t. “Oblique immediate injection” assaults may trick AI fashions with steps corresponding to sending somebody a calendar invitation with directions for his or her digital assistant to export the recipient’s information and ship it to the hacker.

AP Picture/Patrick Semansky

Some options

The European Fee has revealed moral tips for reliable AI that embody an evaluation guidelines for six totally different features of AI techniques: human company and oversight; technical robustness and security; privateness and information governance; transparency, range, nondiscrimination and equity; societal and environmental well-being; and accountability.

Higher documentation of AI builders’ processes may help in highlighting potential harms. For instance, researchers of algorithmic equity have proposed mannequin playing cards, that are much like dietary labels for meals. Information statements and datasheets, which characterize information units used to coach AI fashions, would serve an identical function.

Amazon Net Companies, as an illustration, launched AI service playing cards that describe the makes use of and limitations of some fashions it gives. The playing cards describe the fashions’ capabilities, coaching information and meant makes use of.

The FTC’s inquiry hints that this kind of disclosure could also be a route that U.S. regulators take. Additionally, if the FTC finds OpenAI has violated client safety legal guidelines, it may fantastic the corporate or put it underneath a consent decree.

Supply hyperlink