Meta, owner of Facebook and Instagram, announced major changes to its policies on digitally created and altered media on Friday, ahead of elections poised to test its ability to police deceptive content generated by artificial intelligence technologies.

The social media giant will start applying “Made with AI” labels in May to AI-generated videos, images and audio posted on Facebook and Instagram, expanding a policy that previously addressed only a narrow slice of doctored videos, the vice-president of content policy, Monika Bickert, said in a blogpost.

Bickert said Meta would also apply separate and more prominent labels to digitally altered media that poses a “particularly high risk of materially deceiving the public on a matter of importance”, regardless of whether the content was created using AI or other tools. Meta will begin applying the more prominent “high-risk” labels immediately, a spokesperson said.

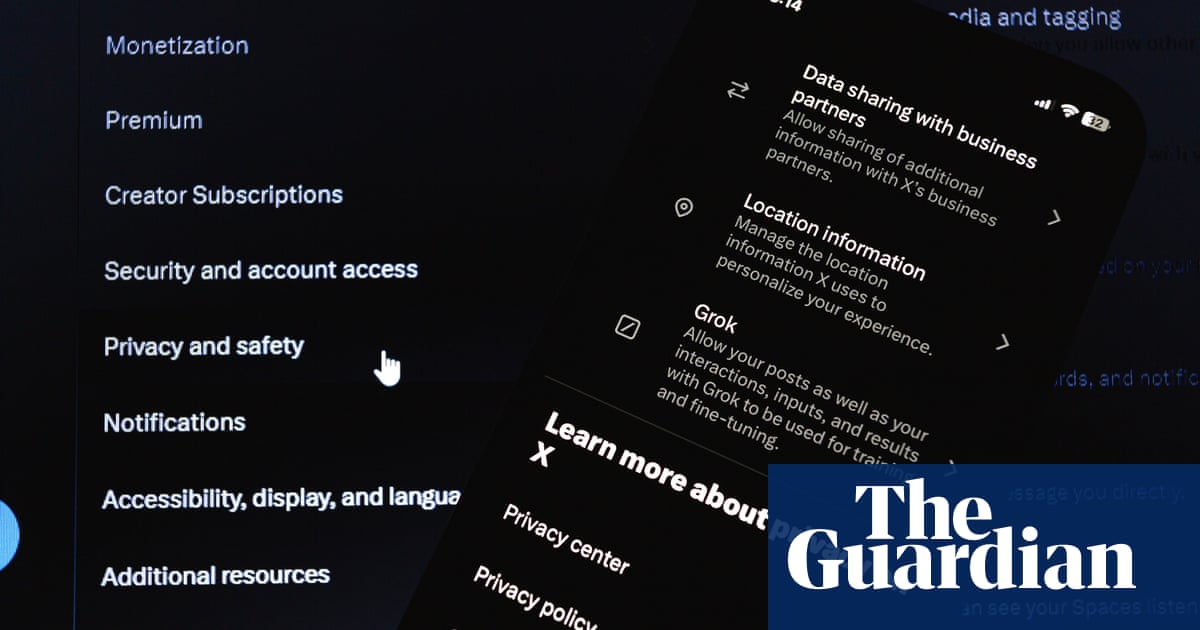

The approach will shift the company’s treatment of manipulated content, moving from a focus on removing a limited set of posts toward keeping the content up while providing viewers with information about how it was made.

Meta previously announced a scheme to detect images made using other companies’ generative AI tools by using invisible markers built into the files, but did not give a start date at the time.

A company spokesperson said the labeling approach would apply to content posted on Facebook, Instagram and Threads. Its other services, including WhatsApp and Quest virtual-reality headsets, are covered by different rules.

The changes come months before a US presidential election in November that tech researchers warn may be transformed by generative AI technologies. Political campaigns have already begun deploying AI tools in places like Indonesia, pushing the boundaries of guidelines issued by providers like Meta and generative AI market leader OpenAI.

In February, Meta’s oversight board called the company’s existing rules on manipulated media “incoherent” after reviewing a video of Joe Biden posted on Facebook last year that altered real footage to wrongfully suggest the US president had behaved inappropriately.

The footage was permitted to stay up, as Meta’s existing “manipulated media” policy bars misleadingly altered videos only if they were produced by artificial intelligence or if they make people appear to say words they never actually said.

The board said the policy should also apply to non-AI content, which is “not necessarily any less misleading” than content generated by AI, as well as to audio-only content and videos depicting people saying or doing things they never actually said or did.
