More than 140 Facebook content moderators have been diagnosed with severe post-traumatic stress disorder caused by exposure to graphic social media content including murders, suicides, child sexual abuse and terrorism.

The moderators worked eight- to 10-hour days at a facility in Kenya for a company contracted by the social media firm. They were diagnosed with PTSD, generalised anxiety disorder (GAD) and major depressive disorder (MDD) by Dr Ian Kanyanya, the head of mental health services at Kenyatta National hospital in Nairobi.

The mass diagnoses were made as part of a lawsuit being brought against Facebook's parent company, Meta, and Samasource Kenya, an outsourcing company that carried out content moderation for Meta using workers from across Africa.

Images and videos depicting necrophilia, bestiality and self-harm caused some moderators to faint, vomit, scream and run away from their desks, the filings allege.

The case is shedding light on the human cost of the boom in social media use in recent years, which has required more and more moderation, often in some of the poorest parts of the world, to protect users from the worst material that some people post.

At least 40 of the moderators in the case were misusing alcohol, drugs including cannabis, cocaine and amphetamines, and medication such as sleeping pills. Some reported the breakdown of their marriages, a collapse in their desire for sexual intimacy, and a loss of connection with their families. Some whose job was to remove videos uploaded by terrorist and rebel groups were afraid they were being watched and targeted, and that if they returned home they would be hunted and killed.

Facebook and other large social media and artificial intelligence companies rely on armies of content moderators to remove posts that breach their community standards and to train AI systems to do the same.

The moderators from Kenya and other African countries were tasked from 2019 to 2023 with checking posts emanating from Africa and written in their own languages, but were paid eight times less than their counterparts in the US, according to the claim documents.

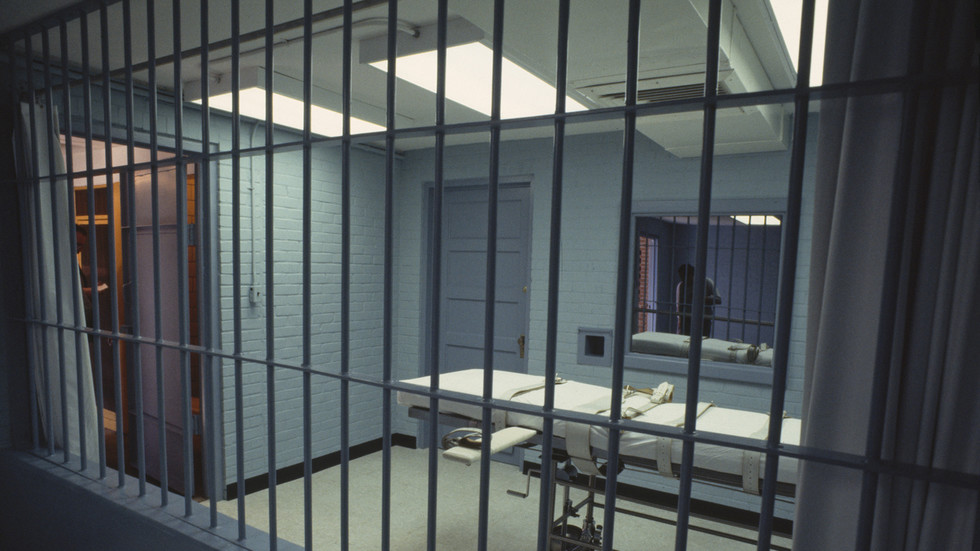

Medical reports filed with the employment and labour relations court in Nairobi and seen by the Guardian paint a horrific picture of working life inside the Meta-contracted facility, where workers were fed a constant stream of images to check in a cold, warehouse-like space, under bright lights and with their working activity monitored to the minute.

Almost 190 moderators are bringing the multi-pronged claim, which includes allegations of intentional infliction of mental harm, unfair employment practices, human trafficking and modern slavery, and unlawful redundancy. All 144 examined by Kanyanya were found to have PTSD, GAD and MDD, with severe or extremely severe PTSD symptoms in 81% of cases, mostly at least a year after they had left the job.

Meta and Samasource declined to comment on the claims because of the litigation.

Martha Dark, the founder and co-executive director of Foxglove, a UK-based non-profit organisation that has backed the court case, said: "The evidence is indisputable: moderating Facebook is dangerous work that inflicts lifelong PTSD on almost everyone who moderates it.

"In Kenya, it traumatised 100% of hundreds of former moderators tested for PTSD … In any other industry, if we discovered 100% of safety workers were being diagnosed with an illness caused by their work, the people responsible would be forced to resign and face the legal consequences for mass violations of people's rights. That is why Foxglove is supporting these brave workers to seek justice from the courts."

According to the filings in the Nairobi case, Kanyanya concluded that the primary cause of the mental health conditions among the 144 people was their work as Facebook content moderators, as they "encountered extremely graphic content on a daily basis, which included videos of gruesome murders, self-harm, suicides, attempted suicides, sexual violence, explicit sexual content, child physical and sexual abuse, horrific violent actions just to name a few".

Four of the moderators suffered from trypophobia, an aversion to or fear of repetitive patterns of small holes or bumps that can cause intense anxiety. For some, the condition developed from seeing holes in decomposing bodies while working on Facebook content.

Moderation and the related task of tagging content are often hidden parts of the tech boom. Similar, but less traumatising, arrangements are made for outsourced workers to tag masses of images of mundane things such as street furniture, living rooms and road scenes so that AI systems designed in California know what they are looking at.

Meta said it took the support of content reviewers seriously. Contracts with third-party moderators of content on Facebook and Instagram detailed expectations about counselling, training, round-the-clock onsite support and access to private healthcare. Meta said pay was above industry standards in the markets where the contractors operated, and that it used techniques such as blurring, muting sounds and rendering in monochrome to limit exposure to graphic material for those who reviewed content on the two platforms.
